Models

Panoptic Head is a PyTorch module that implements a network connected to the output of a DETR-based model. The module predicts segmentation features, represented by one binary mask for each object predicted by the DETR model.

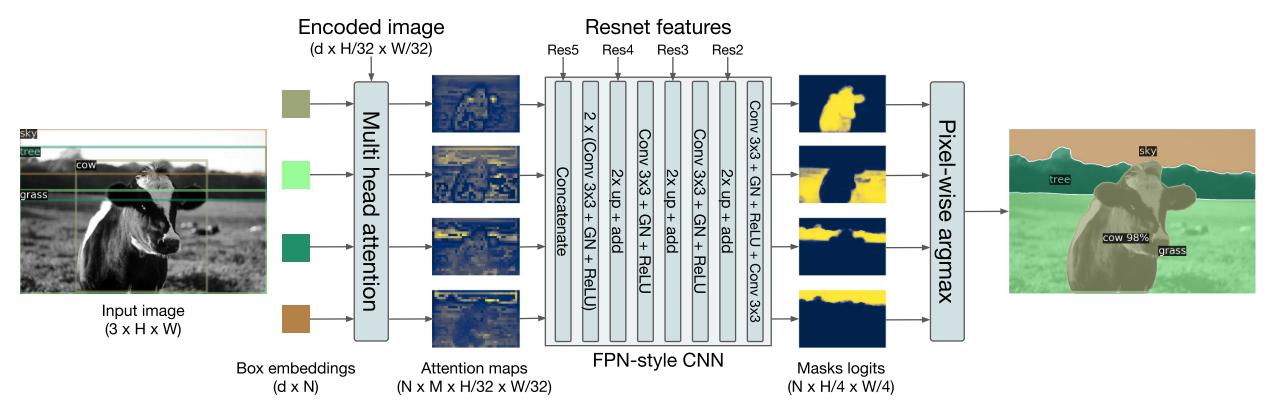

Block diagram of the panoptic head model, taken from the End-to-End Object Detection with Transformers paper

See also

See the Mask object for the data representation of predictions.

Basic usage

Since detr_panoptic implements the Panoptic Head for DETR-based models, a DETR module must first be instantiated and passed as the PanopticHead parameter:

    from alonet.detr import DetrR50
    from alonet.detr_panoptic import PanopticHead

    detr_model = DetrR50()
    model = PanopticHead(DETR_module=detr_model)

If you want to finetune from the model pretrained on the COCO dataset, a DetrFinetune model must be used:

    from alonet.detr import DetrR50Finetune
    from alonet.detr_panoptic import PanopticHead

    detr_model = DetrR50Finetune(num_classes=250)
    model = PanopticHead(DETR_module=detr_model)

To run an inference:

    from aloscene import Frame

    # `model` is assumed to be already defined as above
    device = model.device

    # read the image and preprocess it with ResNet normalization
    frame = Frame(IMAGE_PATH).norm_resnet()

    # create a batch from a list of images
    frames = Frame.batch_list([frame])
    frames = frames.to(device)

    # forward pass
    m_outputs = model(frames)

    # get boxes and masks as aloscene.BoundingBoxes2D and aloscene.Mask from forward outputs
    pred_boxes, pred_masks = model.inference(m_outputs)

    # display the predicted boxes and masks
    frame.append_boxes2d(pred_boxes[0], "pred_boxes")
    frame.append_segmentation(pred_masks[0], "pred_masks")
    frame.get_view([frame.boxes2d, frame.segmentation]).render()

Important

The PanopticHead network predicts one segmentation mask for each box predicted by the DETR-based model. For this reason, the inference function returns an additional output: pred_masks.

Panoptic head API

Panoptic module to use in object detection/segmentation tasks.

- class alonet.detr_panoptic.detr_panoptic.PanopticHead(DETR_module, freeze_detr=True, aux_loss=None, device=device(type='cpu'), weights=None, strict_load_weights=True)

Bases: torch.nn.modules.module.Module

PyTorch head module that predicts segmentation masks from a previous box detection task.

- Parameters

  - DETR_module (alonet.detr.detr): Object detection module based on the DETR architecture
  - freeze_detr (bool, optional): Freeze DETR_module weights in the train procedure, by default True
  - aux_loss (bool, optional): Return aux outputs in the forward step (if required), by default use the DETR_module.aux_loss attribute value
  - device (torch.device, optional): Configure the module on CPU or GPU, by default torch.device("cpu")
  - weights (str, optional): Load weights from the given project name, by default None
  - strict_load_weights (bool): Load the weights (if any given) with strict = True (by default)

- INPUT_MEAN_STD = ((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))
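The two tuples in INPUT_MEAN_STD match the per-channel mean and standard deviation commonly used for ResNet (ImageNet) normalization. The following is a minimal sketch of that normalization, assuming RGB values already scaled to [0, 1] and the usual (value - mean) / std rule per channel. The helper name `normalize_pixel` is hypothetical and is not part of alonet or aloscene.

```python
# Per-channel (mean, std), copied from the documented PanopticHead.INPUT_MEAN_STD
INPUT_MEAN_STD = ((0.485, 0.456, 0.406), (0.229, 0.224, 0.225))


def normalize_pixel(rgb, mean_std=INPUT_MEAN_STD):
    """Hypothetical helper: normalize one RGB pixel with values in [0, 1]."""
    mean, std = mean_std
    # Assumed rule: subtract the channel mean, then divide by the channel std
    return tuple((value - m) / s for value, m, s in zip(rgb, mean, std))


# A pixel equal to the mean maps to zero in every channel
print(normalize_pixel((0.485, 0.456, 0.406)))
```

In practice `Frame.norm_resnet()` is the documented way to apply this preprocessing; the sketch only illustrates the arithmetic.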

- forward(frames, get_filter_fn=None, **kwargs)

PanopticHead forward pass, which adds the new mask features to the previous box predictions.

- Parameters

  - frames (Frames): Input frame to the network
  - get_filter_fn (Callable): Function that returns two parameters: the dec_outputs tensor filtered by a boolean mask per batch. It is expected that the function will at least receive frames and m_outputs parameters as input. By default, the function used for this purpose is get_outs_filter() from the base model.

- Returns

  - dict: It outputs a dict with the following elements:

    - pred_logits: The classification logits (including no-object) for all queries. Shape = [batch_size x num_queries x (num_classes + 1)]
    - pred_boxes: The normalized box coordinates for all queries, represented as (center_x, center_y, height, width). These values are normalized in [0, 1], relative to the size of each individual image (disregarding possible padding). See PostProcess for information on how to retrieve the unnormalized bounding box.
    - pred_masks: Binary masks, one assigned to each predicted box. Shape = [batch_size x num_queries x H // 4 x W // 4]
    - bb_outputs: Backbone outputs, required in this forward
    - enc_outputs: Transformer encoder outputs, required in this forward
    - dec_outputs: Transformer decoder outputs, required in this forward
    - pred_masks_info: Parameters to use in the inference procedure
    - aux_outputs: Optional, only returned when auxiliary losses are activated. It is a list of dictionaries containing the two above keys for each decoder layer.
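The shapes listed for the forward outputs can be made concrete with a small sketch. The helper `expected_output_shapes` is hypothetical (not part of alonet), the numeric values are illustrative, and two points are assumptions: that H and W are the height and width of the input batch, and that pred_boxes has shape [batch_size x num_queries x 4], which the documentation implies through its four box values but does not state explicitly.

```python
def expected_output_shapes(batch_size, num_queries, num_classes, height, width):
    """Hypothetical helper: shapes described in the forward() documentation."""
    return {
        # one extra logit for the no-object class
        "pred_logits": (batch_size, num_queries, num_classes + 1),
        # assumed: four normalized values per query box
        "pred_boxes": (batch_size, num_queries, 4),
        # masks are predicted at a quarter of the input resolution
        "pred_masks": (batch_size, num_queries, height // 4, width // 4),
    }


shapes = expected_output_shapes(
    batch_size=2, num_queries=100, num_classes=250, height=800, width=1200
)
for name, shape in shapes.items():
    print(name, shape)
```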

- inference(forward_out, maskth=0.5, filters=None, **kwargs)

Given the model forward outputs, this method returns a set of BoundingBoxes2D and Mask, with the corresponding Labels for each detected object.

- Parameters

  - forward_out (dict): Dict with the model forward outputs
  - maskth (float, optional): Threshold value to binarize the masks, by default 0.5
  - filters (list, optional): List of filters to select the queries predicting an object, by default None

- Returns

  - BoundingBoxes2D: Boxes from the DETR model
  - Mask: Binary masks from PanopticHead, one for each box
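The role of the maskth parameter can be illustrated with a plain-Python sketch of thresholding. It assumes per-pixel scores in [0, 1] and a strict "greater than" comparison. The actual inference method may apply further steps (for example an activation or resizing), so `binarize_mask` is a hypothetical helper and not the library's implementation.

```python
def binarize_mask(mask, maskth=0.5):
    """Hypothetical helper: turn per-pixel scores into a 0/1 mask."""
    # Assumed rule: a pixel belongs to the object when its score exceeds maskth
    return [[1 if score > maskth else 0 for score in row] for row in mask]


scores = [
    [0.10, 0.70, 0.90],
    [0.40, 0.55, 0.20],
]
print(binarize_mask(scores))              # default threshold of 0.5
print(binarize_mask(scores, maskth=0.8))  # stricter threshold keeps fewer pixels
```

Raising maskth keeps only the most confident pixels, which shrinks each predicted mask.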

- training: bool

- alonet.detr_panoptic.detr_panoptic.main(image_path)

DetrR50 Panoptic Finetune

Module to create a custom PanopticHead model using DetrR50 as the base model. It allows loading chosen pretrained weights and changing the number of outputs in the class_embed layer in order to train custom classes.

- class alonet.detr_panoptic.detr_r50_panoptic_finetune.DetrR50PanopticFinetune(num_classes, background_class=None, base_model=None, base_weights='detr-r50-panoptic', freeze_detr=False, weights=None, *args, **kwargs)

Bases: alonet.detr_panoptic.detr_panoptic.PanopticHead

Pre-made helper class to finetune the DetrR50 and use a pretrained PanopticHead.

- Parameters

  - num_classes (int): Number of classes in the class_embed output layer
  - background_class (int, optional): Background class, by default None
  - base_model (torch.nn, optional): Base model to couple with PanopticHead, by default DetrR50
  - base_weights (str, optional): Load weights from the original DetrR50 + PanopticHead, by default "detr-r50-panoptic"
  - freeze_detr (bool, optional): Freeze DetrR50 weights, by default False
  - weights (str, optional): Weights for the finetune model, by default None

- Raises

  - ValueError: weights must be a '.pth' or '.ckpt' file

- training: bool
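The ValueError documented for DetrR50PanopticFinetune suggests that the weights argument is validated by file extension. A rough sketch of such a check is shown here, purely as an assumption about the behavior: `check_weights_file` and `ALLOWED_SUFFIXES` are hypothetical names, and the real class may validate its input differently.

```python
from pathlib import Path

# Extensions named in the documented ValueError message
ALLOWED_SUFFIXES = {".pth", ".ckpt"}


def check_weights_file(weights):
    """Hypothetical helper: accept None or a '.pth' / '.ckpt' path."""
    if weights is None:
        # No finetune weights requested (the documented default)
        return None
    if Path(weights).suffix not in ALLOWED_SUFFIXES:
        raise ValueError("weights must be a '.pth' or '.ckpt' file")
    return weights


print(check_weights_file("runs/best.ckpt"))
```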